This Tuesday briefing, written by Justin Porter, covers the unfolding crisis at OpenAI, which has cast doubt on the future of one of Silicon Valley’s fastest-growing companies, and another deadly attack on a hospital in Gaza.

OpenAI’s Future in Jeopardy

OpenAI, a high-profile artificial intelligence start-up, is in turmoil: more than 700 of its 770 employees have threatened to leave the company. The catalyst is the ousting of CEO Sam Altman by OpenAI’s four-person board. The move, announced on Friday, sent shockwaves through the tech industry.

A Leadership Crisis Unfolds

The board removed Altman, citing a loss of trust, and the decision set off a tumultuous weekend. By the end of it, Altman had joined Microsoft to start a new A.I. project alongside Greg Brockman, OpenAI’s president and co-founder. OpenAI had announced talks to reinstate Altman, but those hopes were dashed later that evening, when it was revealed that he would not be returning.

700 Employees on the Brink of Departure

The letter, signed by the majority of OpenAI’s workforce, shows the depth of the unrest within the company. A mass exodus to Microsoft would leave OpenAI in a dire staffing crisis.

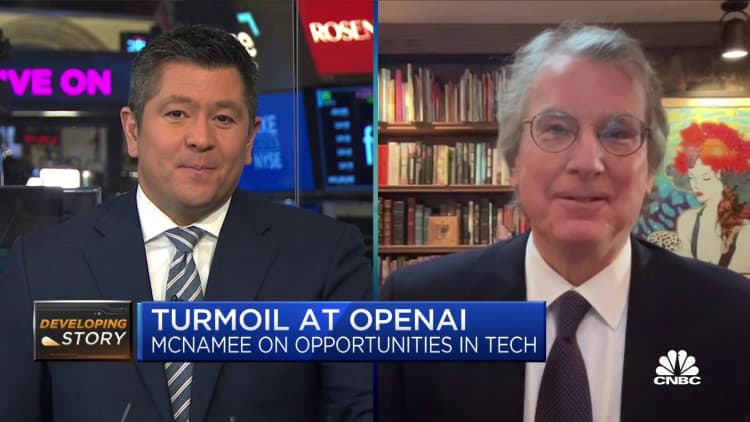

The Microsoft Factor

Microsoft, which has invested $13 billion in OpenAI, stands to benefit from this internal strife. The company can continue using OpenAI’s models to power its products while also backing a new team led by Altman. That positions Microsoft to gain both technology and talent.

Winners and Losers in the Upheaval

Kevin Roose, a colleague of Porter’s, argues that the episode is a loss for OpenAI, leaving the company’s leadership and morale shattered. Microsoft, by contrast, emerges as a clear winner, securing both ongoing access to OpenAI’s models and the prospect of new projects under Altman’s leadership.

Concerns Over A.I. Power Addressed

The episode also marks a victory for those who have long warned about the unchecked power of A.I. systems. Some board members who participated in Altman’s removal were motivated by similar concerns, aligning with the voices of researchers and activists who advocate for responsible A.I. development.

Another Deadly Attack on a Gaza Hospital

The briefing also reports another deadly attack on a hospital in Gaza. The image accompanying this briefing captures the grim reality on the ground: a man in scrubs attends to a boy with a bloodied face and to two others with medical wrapping around their foreheads.

Conclusion: A Pivotal Tuesday with Global Ramifications

The crisis at OpenAI puts the future of the company at stake and highlights broader concerns about corporate governance and the role of A.I. in shaping our technological landscape. The attack on the Gaza hospital is a stark reminder of the human cost in conflict zones and of the need for a closer examination of global priorities.

FAQs (Frequently Asked Questions)

Why did the board remove Sam Altman from OpenAI?

The board cited a loss of trust as the reason for removing Sam Altman from OpenAI.

What is the potential impact of the employee threat to leave OpenAI for Microsoft?

The potential departure of more than 700 of OpenAI’s 770 employees to Microsoft could lead to a significant staffing crisis for OpenAI.

How does Microsoft benefit from the OpenAI upheaval?

Microsoft stands to benefit by retaining access to OpenAI’s models for its products and supporting a new project led by Altman, potentially gaining both technological and personnel advantages.

What concerns about A.I. power were addressed in this situation?

The removal of Sam Altman and concerns expressed by some board members highlight broader worries about the unchecked power of A.I. systems, echoing the sentiments of researchers and activists advocating for responsible A.I. development.